Projects

Check out my GitHub profile for a complete list of my projects.

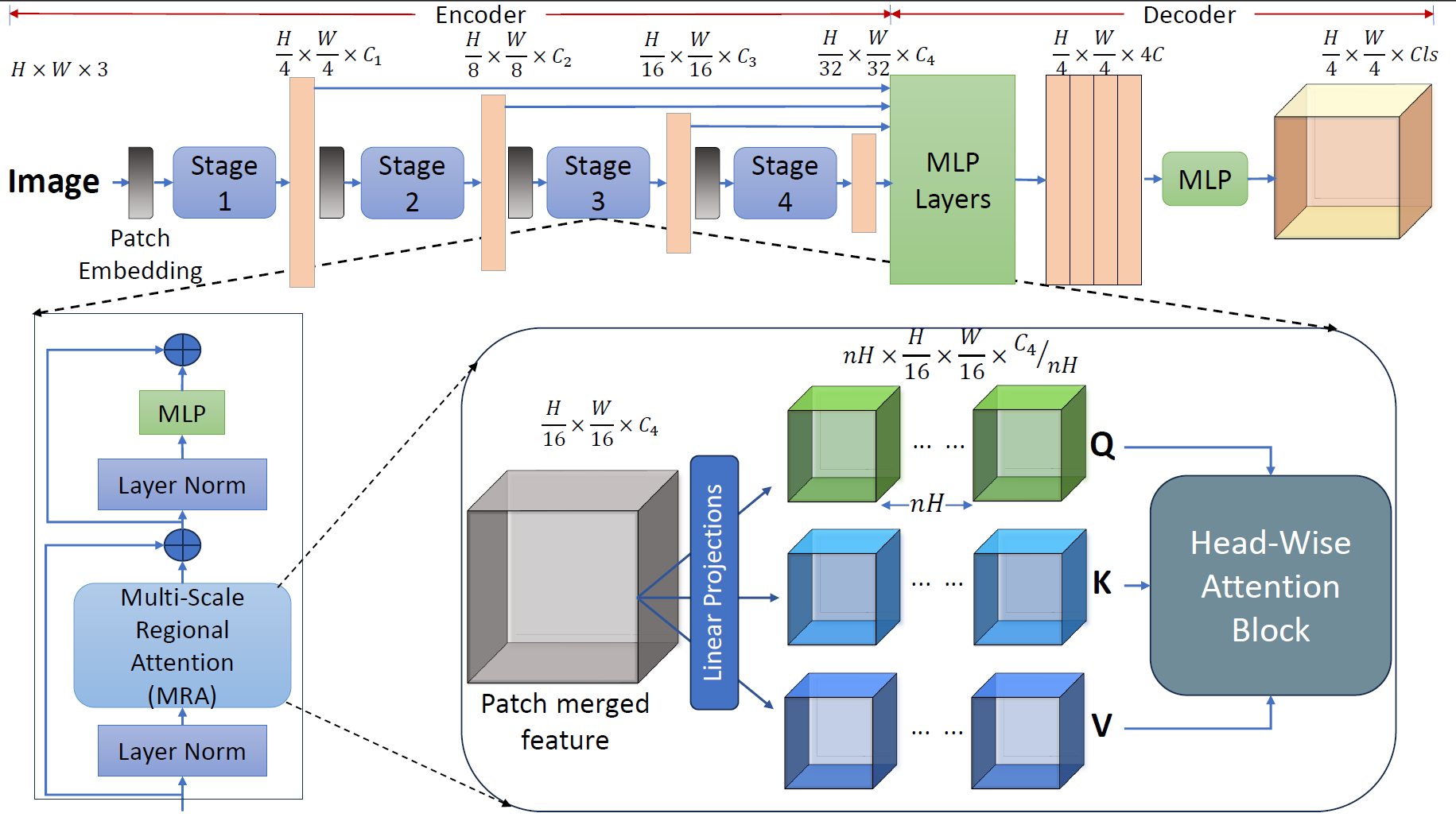

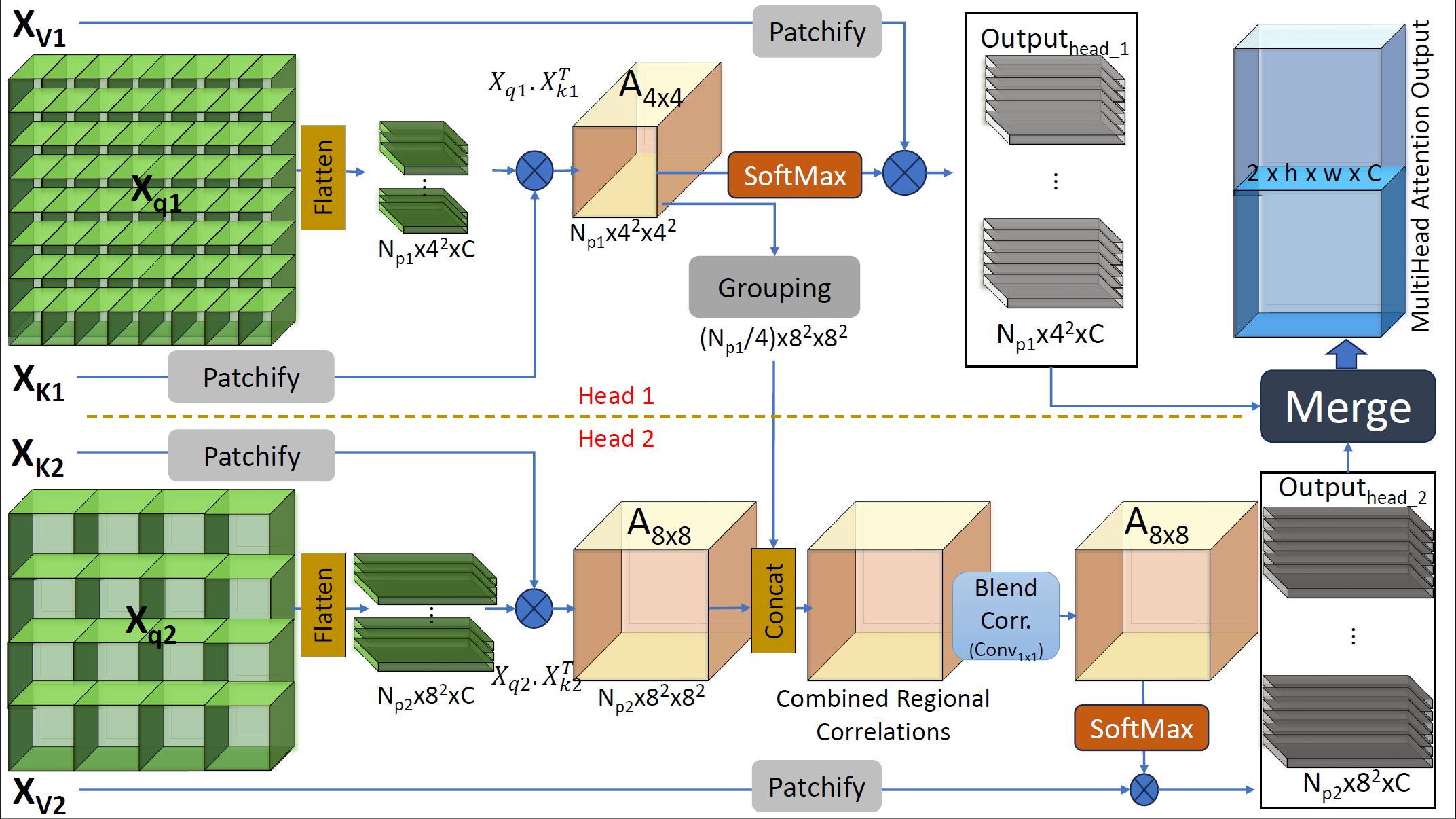

MRA adds multi-scale regional self-attention to Vision Transformers, letting each pixel attend to coarse-to-fine neighbourhoods instead of the whole image. This avoids the quadratic cost of global self-attention while matching state-of-the-art segmentation accuracy on Cityscapes and BraTS.
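A minimal NumPy sketch of the idea, assuming square neighbourhoods of radius `r`, identity Q/K/V projections and simple averaging across scales (all hypothetical simplifications, not necessarily how MRA fuses its scales). Each pixel scores only the (2r+1)² keys around it, so the cost is O(H·W·(2r+1)²·C) rather than O((H·W)²·C) for global attention:

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def regional_attention(q, k, v, radius):
    """Each pixel attends only to keys in its (2r+1) x (2r+1) neighbourhood.

    q, k, v: arrays of shape (H, W, C).
    """
    H, W, C = q.shape
    r = radius
    # Zero-pad keys/values; padded positions are masked out via `valid`.
    kp = np.pad(k, ((r, r), (r, r), (0, 0)))
    vp = np.pad(v, ((r, r), (r, r), (0, 0)))
    valid = np.pad(np.ones((H, W), dtype=bool), r)  # pads with False
    n = (2 * r + 1) ** 2
    k_nb = np.empty((H, W, n, C))
    v_nb = np.empty((H, W, n, C))
    m_nb = np.empty((H, W, n), dtype=bool)
    # Gather every neighbour offset into a dense (H, W, n, ...) stack.
    idx = 0
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            k_nb[:, :, idx] = kp[dy:dy + H, dx:dx + W]
            v_nb[:, :, idx] = vp[dy:dy + H, dx:dx + W]
            m_nb[:, :, idx] = valid[dy:dy + H, dx:dx + W]
            idx += 1
    scores = np.einsum("hwc,hwnc->hwn", q, k_nb) / np.sqrt(C)
    scores = np.where(m_nb, scores, -np.inf)  # ignore out-of-image neighbours
    weights = softmax(scores, axis=-1)
    return np.einsum("hwn,hwnc->hwc", weights, v_nb)

def multiscale_regional_attention(x, radii=(1, 2, 4)):
    """Average regional attention over fine-to-coarse neighbourhood radii
    (hypothetical fusion rule; identity Q/K/V projections)."""
    return np.mean([regional_attention(x, x, x, r) for r in radii], axis=0)
```

With `radius=0` each pixel sees only itself, and with a radius covering the whole feature map the result reduces to ordinary global attention, which makes the two ends of the coarse-to-fine range easy to sanity-check.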

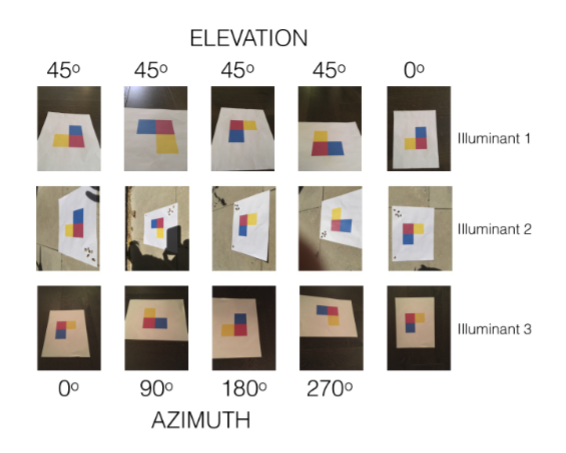

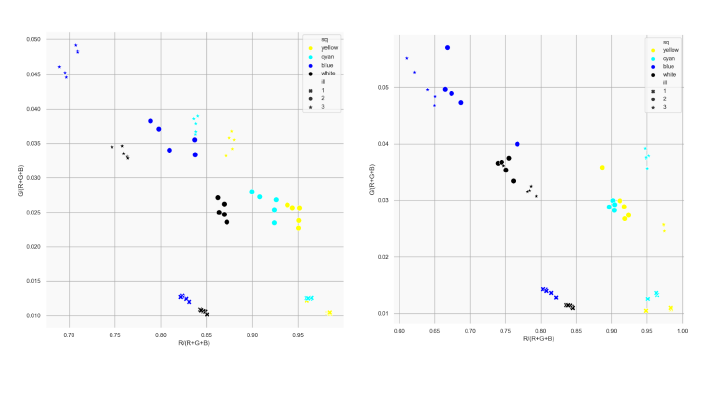

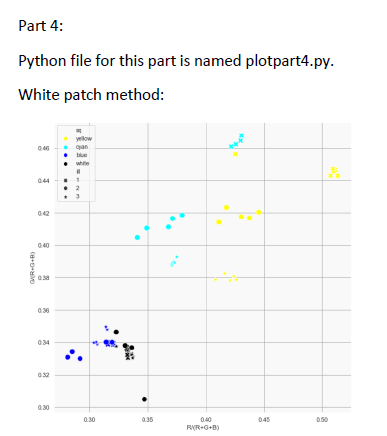

Empirical study of colour constancy under variable illumination. Includes sensor calibration and controlled Google Pixel 3a experiments.
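For context, the classic gray-world baseline for colour constancy assumes the average scene reflectance is achromatic, so any colour cast in the channel means is attributed to the illuminant and divided out. This is a generic textbook method shown for illustration, not the procedure used in the study; the input is assumed to be a linear RGB float array:

```python
import numpy as np

def gray_world(img):
    """Gray-world white balance.

    img: float array (H, W, 3) in linear RGB.
    Returns the image with per-channel gains applied so that all
    channel means equal the overall mean (no clipping is performed).
    """
    img = np.asarray(img, dtype=np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)  # illuminant estimate
    gray = channel_means.mean()
    gains = gray / channel_means
    return img * gains  # broadcasts over the last (channel) axis
```

Gray-world fails on scenes dominated by one colour (e.g. a close-up of grass), which is one reason controlled experiments under known illumination are useful for evaluating such estimators.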

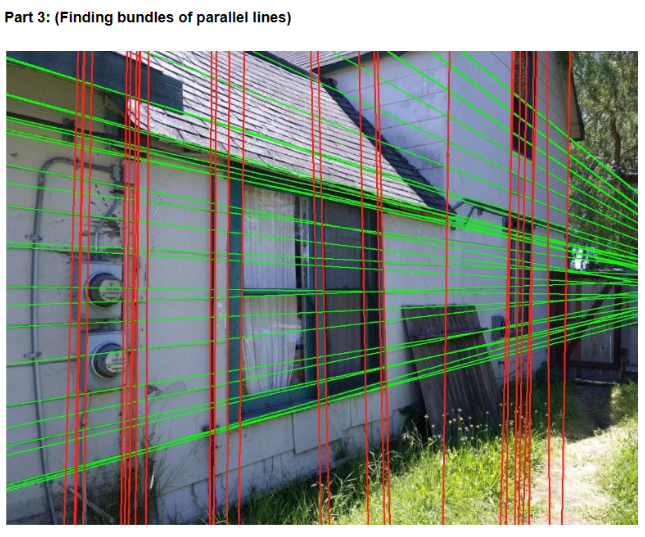

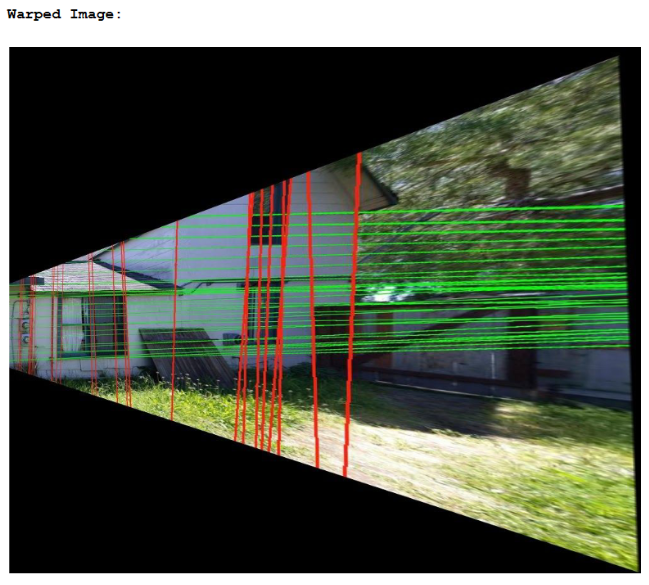

Geometric rectification of architectural photos using camera calibration, vanishing-point estimation, and homography for sub-pixel façade alignment.
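The homography step can be sketched with the normalized Direct Linear Transform (DLT): given at least four point pairs (for example, façade corners and the corners of the target rectangle), each pair contributes two linear constraints on the nine entries of `H`, and the solution is the null vector of the stacked system. This is a generic sketch; vanishing-point estimation and calibration are not shown, and the function names are illustrative:

```python
import numpy as np

def _normalize(pts):
    """Hartley normalization: centroid to origin, mean distance sqrt(2)."""
    c = pts.mean(axis=0)
    d = np.sqrt(((pts - c) ** 2).sum(axis=1)).mean()
    s = np.sqrt(2) / d
    T = np.array([[s, 0, -s * c[0]], [0, s, -s * c[1]], [0, 0, 1]])
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ T.T
    return ph[:, :2], T

def homography_dlt(src, dst):
    """Estimate 3x3 H with dst ~ H @ src from >= 4 point pairs."""
    src, Ts = _normalize(np.asarray(src, float))
    dst, Td = _normalize(np.asarray(dst, float))
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two equations per correspondence, linear in the entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows))
    Hn = vt[-1].reshape(3, 3)  # right singular vector of smallest singular value
    H = np.linalg.inv(Td) @ Hn @ Ts  # undo the normalizations
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (N, 2) points through H with the perspective divide."""
    pts = np.asarray(pts, float)
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]
```

Normalizing the points before building the system keeps the SVD well conditioned when pixel coordinates are in the hundreds or thousands.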

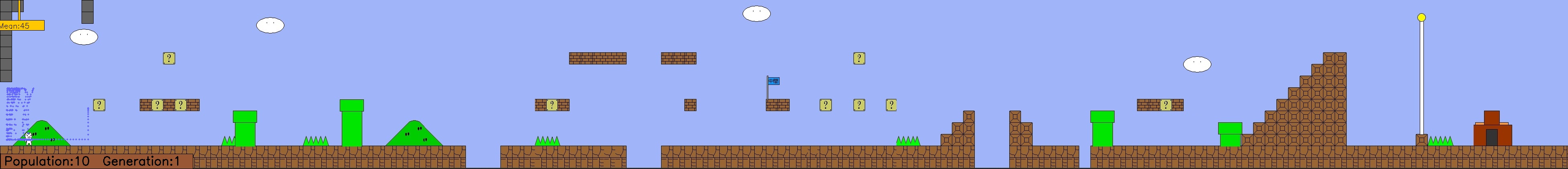

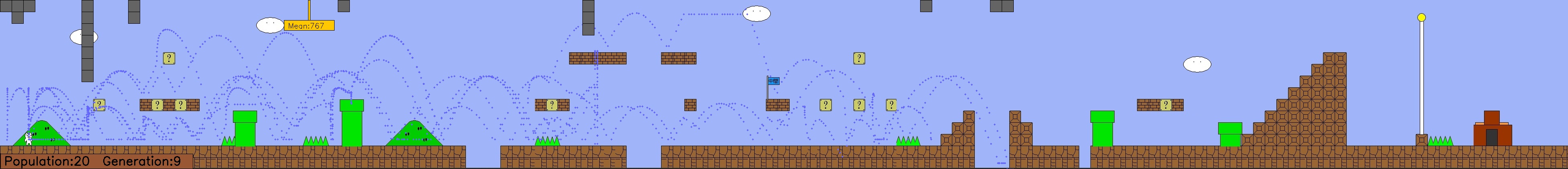

NEAT-based RL agent that masters Cat Mario from raw video, drawing inspiration from MarI/O and CrAIg.
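One core piece of NEAT is speciation by compatibility distance, δ = c1·E/N + c2·D/N + c3·W̄, where E and D count excess and disjoint genes, W̄ is the mean weight difference of matching genes, and N is the size of the larger genome. The sketch below assumes a hypothetical genome encoding (a dict from innovation number to connection weight) and illustrative coefficient defaults; it is not taken from this project's code:

```python
def compatibility_distance(g1, g2, c1=1.0, c2=1.0, c3=0.4):
    """NEAT compatibility distance between two genomes.

    Each genome is a dict mapping innovation number -> connection weight
    (hypothetical simplified encoding).
    """
    if not g1 and not g2:
        return 0.0
    # Genes beyond the other genome's highest innovation number are "excess";
    # other non-matching genes are "disjoint".
    cutoff = min(max(g1, default=-1), max(g2, default=-1))
    matching = g1.keys() & g2.keys()
    non_matching = g1.keys() ^ g2.keys()
    excess = sum(1 for i in non_matching if i > cutoff)
    disjoint = len(non_matching) - excess
    n = max(len(g1), len(g2))
    w_bar = (
        sum(abs(g1[i] - g2[i]) for i in matching) / len(matching)
        if matching else 0.0
    )
    return c1 * excess / n + c2 * disjoint / n + c3 * w_bar
```

Genomes whose distance falls below a threshold are placed in the same species, which protects new topological innovations from being eliminated before their weights have been tuned.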

VIM-RL

Multi-agent RL framework that guides a generic driving policy with specialist experts, producing 44% safer trajectories in occluded-pedestrian scenarios.
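One common way to guide a generic policy with specialists is to add a KL penalty pulling its action distribution toward the responsible expert's. The sketch below assumes discrete actions, a hypothetical per-state routing array `expert_ids`, and a plain policy-gradient term; it illustrates that generic technique and is not necessarily the mechanism VIM-RL uses. Only the loss value is computed here; a real implementation would differentiate it with an autodiff framework:

```python
import numpy as np

def log_softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def kl(p_logits, q_logits):
    """KL(p || q) per state for categorical action distributions."""
    log_p, log_q = log_softmax(p_logits), log_softmax(q_logits)
    return (np.exp(log_p) * (log_p - log_q)).sum(axis=-1)

def guided_loss(generic_logits, expert_logits, expert_ids,
                advantages, actions, beta=0.1):
    """Policy-gradient loss plus a KL pull toward the assigned expert.

    generic_logits: (B, A) logits of the generic policy
    expert_logits:  (E, B, A) logits of E specialist experts
    expert_ids:     (B,) index of the expert assigned to each state (hypothetical)
    advantages, actions: (B,) from rollouts
    beta: guidance strength (hypothetical hyperparameter)
    """
    B = generic_logits.shape[0]
    log_pi = log_softmax(generic_logits)
    pg = -(log_pi[np.arange(B), actions] * advantages).mean()
    chosen = expert_logits[expert_ids, np.arange(B)]  # (B, A)
    guidance = kl(chosen, generic_logits).mean()
    return pg + beta * guidance
```

Annealing `beta` toward zero over training is one way to let the generic policy eventually outperform its specialists instead of only imitating them.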

“LLaMA-3.2 × Perfetto” traces every compute and memory step of a single transformer block (dot-products, RoPE, KV-cache I/O, MLP, layer norms, residual adds) and visualises them in Perfetto with rich tensor metadata. Quick start: run `setup.sh`, download the weights via HF-CLI, then run `torchrun load_model.py` to emit a Perfetto JSON trace.
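Perfetto's UI can open traces in the Chrome JSON Trace Event format, where each timed operation is a "complete" event (`"ph": "X"`) with a start timestamp and duration in microseconds, and arbitrary metadata goes in `args`. The recorder below is a minimal sketch of emitting such a trace; the class name and the op names used with it are hypothetical and not this project's API:

```python
import json
import time
from contextlib import contextmanager

class TraceRecorder:
    """Collects Chrome Trace Event 'complete' events viewable in Perfetto."""

    def __init__(self, pid=0, tid=0):
        self.events = []
        self.pid, self.tid = pid, tid
        self._t0 = time.perf_counter()

    def _now_us(self):
        # Trace Event timestamps are in microseconds.
        return (time.perf_counter() - self._t0) * 1e6

    @contextmanager
    def span(self, name, **args):
        """Time the enclosed block; keyword args become event metadata."""
        start = self._now_us()
        try:
            yield
        finally:
            self.events.append({
                "name": name, "ph": "X", "ts": start,
                "dur": self._now_us() - start,
                "pid": self.pid, "tid": self.tid, "args": args,
            })

    def to_json(self):
        return json.dumps({"traceEvents": self.events})

    def dump(self, path):
        with open(path, "w") as f:
            f.write(self.to_json())
```

Wrapping each step of the block, e.g. `with rec.span("rope", seq_len=8, head_dim=64): ...`, and calling `rec.dump("trace.json")` produces a file that can be dragged into the Perfetto UI, with the keyword arguments shown as the slice's metadata.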

WaveFormer leverages discrete wavelet transforms to capture multi-scale token interactions in hierarchical transformers. It tops the leaderboard on FLARE, KiTS, and AMOS while cutting FLOPs.
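To make the wavelet idea concrete, here is a one-level Haar DWT applied along the token axis: it splits N tokens into N/2 low-frequency (approximation) and N/2 high-frequency (detail) tokens, and repeating it on the approximation yields a coarse-to-fine token pyramid. Since attention cost grows quadratically with sequence length, attending over N/2 tokens costs roughly a quarter as much. The choice of the Haar wavelet and this token layout are assumptions for illustration; WaveFormer's actual transform and integration may differ:

```python
import numpy as np

def haar_dwt_tokens(x):
    """One-level Haar DWT along the token axis.

    x: (N, C) with N even. Returns (approx, detail), each (N/2, C).
    """
    x = np.asarray(x, float)
    if x.shape[0] % 2:
        raise ValueError("token count must be even")
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2)  # low-pass: local averages
    detail = (even - odd) / np.sqrt(2)  # high-pass: local differences
    return approx, detail

def haar_idwt_tokens(approx, detail):
    """Exact inverse of haar_dwt_tokens."""
    n, c = approx.shape
    x = np.empty((2 * n, c))
    x[0::2] = (approx + detail) / np.sqrt(2)
    x[1::2] = (approx - detail) / np.sqrt(2)
    return x

def multilevel(x, levels):
    """Repeatedly decompose the approximation into a token pyramid."""
    details = []
    for _ in range(levels):
        x, d = haar_dwt_tokens(x)
        details.append(d)
    return x, details
```

Because the Haar transform is orthogonal, it preserves the tokens' total energy and is exactly invertible, so no information is lost when attention is applied to the coarser levels.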